If you are learning Big Data, want to explore the Hadoop framework, and are looking for some excellent courses, then you have come to the right place. In this article, I am going to share some of the best Hadoop courses to learn Hadoop in depth. In the last couple of articles, I shared some Big Data and Apache Spark resources, which were well received by my readers. After that, a couple of my readers emailed me and asked about Hadoop resources, like books, tutorials, and courses, which they can use to learn Hadoop better. This is the first article in the series on Hadoop; I am going to share a lot more about Hadoop and some excellent resources in the coming month, like books and tutorials.

Btw, if you don't know, Hadoop is an open-source distributed computing framework for analyzing Big Data, and it has been around for some time.

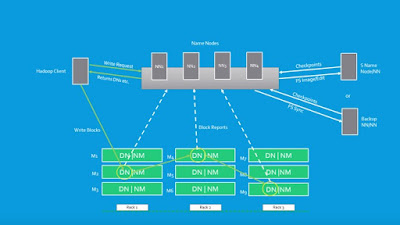

The classic MapReduce pattern, which many companies use to process and analyze Big Data, also runs on a Hadoop cluster. The idea of Hadoop is simple: leverage a network of computers to process a massive amount of data by distributing it across the nodes and later combining the individual outputs to produce the result.
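To make the idea concrete, here is a minimal sketch of the map, shuffle, and reduce steps as a word count in plain Python. It does not use Hadoop at all; the function names (`map_phase`, `shuffle`, `reduce_phase`, `word_count`) are made up for illustration, and the "nodes" are just items in a list.

```python
from collections import defaultdict


def map_phase(chunk):
    """Emit a (word, 1) pair for every word in one chunk of text."""
    return [(word.lower(), 1) for word in chunk.split()]


def shuffle(mapped_outputs):
    """Group the values by key across the outputs of all mappers."""
    grouped = defaultdict(list)
    for pairs in mapped_outputs:
        for key, value in pairs:
            grouped[key].append(value)
    return grouped


def reduce_phase(grouped):
    """Combine the grouped values for each key into a single count."""
    return {key: sum(values) for key, values in grouped.items()}


def word_count(chunks):
    # On a real cluster each chunk would be processed on a different node;
    # in this sketch the "nodes" are simply iterations of a loop.
    mapped = [map_phase(chunk) for chunk in chunks]
    return reduce_phase(shuffle(mapped))


if __name__ == "__main__":
    chunks = ["big data needs big clusters", "hadoop processes big data"]
    print(word_count(chunks))
```

Each chunk is mapped independently, which is what lets a framework like Hadoop spread the work over many machines before the partial results are combined.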

Though MapReduce is one of the most popular Hadoop features, the Hadoop ecosystem is much more than that. You have HDFS, YARN, Pig, Hive, Kafka, HBase, Spark, Knox, Ranger, Ambari, ZooKeeper, and many other Big Data technologies.

Btw, why Hadoop? Why should you learn Hadoop? Well, it is one of the most popular skills in the IT industry today. The average salary for a Big Data developer in the US is around $112,000 per annum, which goes up to an average of $160,000 in San Francisco, as per Indeed.

There are also a lot of exciting and rewarding opportunities in the Big Data world, and these courses will help you understand those technologies and improve your understanding of the overall Hadoop ecosystem.

5 Best Udemy Courses to Learn Hadoop & Big Data in 2025

Without any further ado, here is my list of some of the best Hadoop courses you can take online to learn and master Hadoop and Big Data in 2025 and beyond.

This is seriously the ultimate course on learning Hadoop and other Big Data technologies as it covers Hadoop, MapReduce, HDFS, Spark, Hive, Pig, HBase, MongoDB, Cassandra, Flume, etc.

In this course, you will learn to design distributed systems that manage a huge amount of data using Hadoop and related technology.

You will not only learn how to use Pig and Spark to create scripts to process data on the Hadoop cluster but also how to analyze non-relational data using HBase, Cassandra, and MongoDB.

It will also teach you how to choose an appropriate data storage technology for your application and how to publish data to your Hadoop cluster using high-speed messaging solutions like Apache Kafka, Sqoop, and Flume.

You will also learn about analyzing relational data using Hive and MySQL and querying data interactively using Drill, Phoenix, and Presto.

In total, it covers over 25 technologies to provide you with complete knowledge of the Big Data space.

Processing billions of records is not easy. You need a deep understanding of distributed computing and the underlying architecture to keep things under control, and if you are using Hadoop to do that job, then this course will teach you all the things you need to know.

As the name suggests, the course focuses on the building blocks of the Hadoop framework, like HDFS for storage, MapReduce for processing, and YARN for cluster management.
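To give a rough feel for the storage building block, here is a small sketch of how a file could be cut into blocks and each block copied to several nodes. The 128 MB block size and the replication factor of 3 are HDFS defaults in Hadoop 2 and later; everything else is an assumption made for illustration: the `plan_blocks` function, the node names, and especially the round-robin placement, which stands in for the rack-aware policy that real HDFS uses.

```python
import math

BLOCK_SIZE = 128 * 1024 * 1024  # 128 MB, default HDFS block size in Hadoop 2+
REPLICATION = 3                 # default HDFS replication factor


def plan_blocks(file_size, nodes, block_size=BLOCK_SIZE, replication=REPLICATION):
    """Return a list of (block_index, [nodes holding a replica]).

    Placement here is plain round-robin, a hypothetical simplification;
    real HDFS uses a rack-aware placement policy.
    """
    if replication > len(nodes):
        raise ValueError("need at least as many nodes as replicas")
    num_blocks = math.ceil(file_size / block_size)
    plan = []
    for i in range(num_blocks):
        # Pick `replication` distinct nodes, starting at a rotating offset.
        replicas = [nodes[(i + r) % len(nodes)] for r in range(replication)]
        plan.append((i, replicas))
    return plan


if __name__ == "__main__":
    # A 300 MB file on a hypothetical four-node cluster.
    for block, replicas in plan_blocks(300 * 1024 * 1024, ["n1", "n2", "n3", "n4"]):
        print(block, replicas)
```

Because every block lives on more than one node, losing a single machine does not lose data, and processing can be scheduled close to wherever a copy of the block already sits.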

In this course, first, you will learn about Hadoop architecture and then do some hands-on work by setting up a pseudo-distributed Hadoop environment.

You will submit and monitor tasks in that environment and slowly learn how to make configuration choices for stability, optimization, and scheduling of your distributed system.

At the end of this course, you should have complete knowledge of how Hadoop works and its individual building blocks like HDFS, MapReduce, and YARN.

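3. SQL on Hadoop - Analyzing Big Data with Hive [Pluralsight]

If you don't know what Hive is, let me give you a brief overview. Apache Hive is a data warehouse project built on top of Apache Hadoop for providing data summarization, query, and analysis.

It provides a SQL-like interface to query data stored in the various databases and file systems that integrate with Hadoop, as well as NoSQL databases like HBase and Cassandra.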

The course starts by explaining key Apache Hadoop concepts like distributed computing and MapReduce, and then goes into great detail on Apache Hive.

The course presents some real-world challenges to demonstrate how Hive makes those tasks easier to accomplish.

In short, this is an excellent course for learning how to use the Hive query language to solve common Big Data problems.
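If you have never seen a SQL-style summarization query, the sketch below shows the general shape of one. Note that it runs on Python's built-in `sqlite3` module as a stand-in, not on Hive, so no cluster is needed; the `page_views` table, its columns, and the `page_views_per_country` function are hypothetical, and Hive's own dialect and setup differ in the details.

```python
import sqlite3


def page_views_per_country(rows):
    """Summarize (country, views) rows with a GROUP BY query.

    sqlite3 is used only as a local stand-in for a SQL-like engine.
    """
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE page_views (country TEXT, views INTEGER)")
    conn.executemany("INSERT INTO page_views VALUES (?, ?)", rows)
    query = """
        SELECT country, SUM(views) AS total_views
        FROM page_views
        GROUP BY country
        ORDER BY total_views DESC
    """
    result = conn.execute(query).fetchall()
    conn.close()
    return result


if __name__ == "__main__":
    rows = [("US", 10), ("IN", 7), ("US", 5), ("DE", 3)]
    print(page_views_per_country(rows))
```

The appeal of a SQL-like layer is that an analyst writes a declarative query like this one, and the engine takes care of turning it into distributed jobs.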

If you are a beginner and want to learn everything about Hadoop and related technology, then this is the perfect course for you.

In this course, instructor Andalib Ansari will teach you the complex architecture of Hadoop and its various components like MapReduce, YARN, Hive, and Pig for analyzing big data sets.

You will not only understand the purpose of Hadoop and how it works, but also learn how to install Hadoop on your machine and write your own code in Hive and Pig to process a massive amount of data.

Apart from basic stuff, you will also learn advanced concepts like designing your own data pipeline using Pig and Hive.

The course also gives you an opportunity to practice with big data sets. It is also one of the most popular Hadoop courses on Udemy, with over 24,805 students already enrolled and over 1,000 ratings at an average of 4.2.

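5. Learn Big Data: The Hadoop Ecosystem Masterclass [Udemy course]

This is another great course to learn Big Data from Udemy. In this course, instructor Edward Viaene will teach you how to process Big Data using batch processing.

The course is very hands-on, but it comes with the right amount of theory. It contains more than 6 hours of lectures to teach you everything you need to know about Hadoop.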

You will also learn how to install and configure the Hortonworks Data Platform, or HDP. It provides demos that you can try out on your machine by setting up a Hadoop cluster on a virtual machine, though you need 8 GB or more of RAM for that.

Overall, this is an excellent course for anyone who is interested in how Big Data works and which technologies are involved, with some hands-on experience.

That's all about some of the best Udemy courses to learn about Hadoop and related technology like Hive, HDFS, MapReduce, YARN, Pig, etc. Hadoop is one of the most popular frameworks in the Big Data space, and a good knowledge of Hadoop will go a long way in boosting your career prospects, especially if you are interested in Big Data, an essential skill for the future along with Machine Learning and Artificial Intelligence.

Other Programming Resources You may like

- The 2025 Java Developer RoadMap

- 10 Advanced Java Courses for Experienced Programmers

- 10 Books Java Developers Should Read in 2025

- 5 Courses to Learn Python in 2025

- Top 5 Courses to become a full-stack Java developer

- 5 Data Science and Machine Learning Course for Programmers

- 5 React Native Courses for JavaScript Developers

- 5 Free Courses to learn Spring Boot and Spring MVC in 2025

- 5 Spring Microservice Courses for Java Developers

- 10 Things Java Developers Should Learn in 2025

- 5 Courses that can help you to become Scrum Master in 2025

- 10 Free Docker Courses for Java developer to learn DevOps

- 5 Free Jenkins and Maven Courses for Java Developers

Thanks for reading this article so far. If you like these best Hadoop and Big Data online training courses, then please share them with your friends and colleagues. If you have any questions or feedback, then please drop a note.

P. S. - If you are looking for some free courses to start your Big Data and Hadoop journey, then you can also check out this list of free Big Data, Hadoop, and Spark Courses for Programmers and Software Developers.
